M3.06. Preparing the evaluation of the mentoring process

| Site: | EcoMentor Blended Learning VET Course |

| Course: | Course for mentor in the sector of eco-industry |

| Book: | M3.06. Preparing the evaluation of the mentoring process |

| Printed by: | Guest |

| Date: | Friday, 31 October 2025, 23:41 |


1. What is Evaluation

The purpose of evaluation is to facilitate an assessment of a mentoring programme and, through analysis of the collected data, conclusions are developed about the quality, value, significance and merit of the programme. These results can be disseminated to those who have a vested interest in the programme (stakeholders) and can be used to make improvements to the quality of the programme.

Evaluation is a key step in the mentoring programme; the outcomes of the evaluation could determine the fate of the programme. It is, therefore, essential that evaluation is done correctly, and the following pitfalls are avoided:

- Unfair assessments by the evaluator

- Incorrect evaluation methods used

- Misunderstanding of the data collected

- Misrepresentation of the data collected

- Corners are cut during the evaluation process due to lack of experience/resources

Evaluation is a valuable process because it provides an opportunity to use the evaluation results to make positive changes to a current mentoring programme or it may provide the incentive to roll out a piloted programme to more departments.

Mentoring programmes implemented within an organisation can vary in their size and structure. This variation can be caused by several factors, for example:

- Organisation size

- Number of departments within the organisation

- Perceived need for a mentoring programme

- Resource availability

- Length of time mentoring programme has been in place (i.e. may be piloted in one or two departments, before being rolled out within the whole organisation)

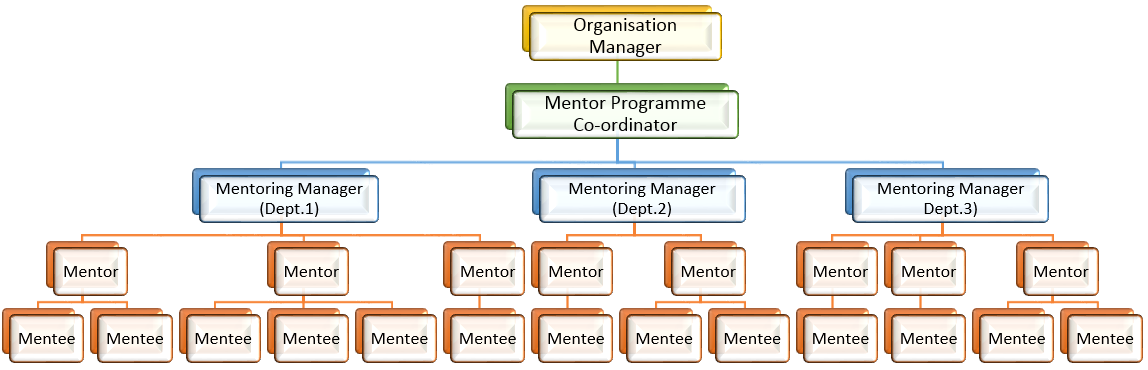

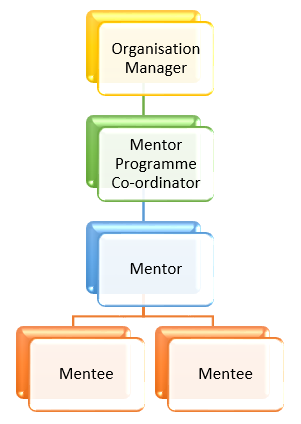

The following organograms show the potential variations between two different mentoring programme structures.

Organogram showing mentoring programme across three departments

Organogram showing a small mentoring programme

The evaluation process, using evaluative thinking, is a systematic approach which involves:

- Identifying assumptions about why you think your strategy, initiative or programme will work.

- Determining what change you expect to see during and after you implement what you set out to do.

- Collecting and analysing data to understand what happened.

- Communicating, interpreting and reflecting on the results.

- Making informed decisions to make improvements.

Evaluation is a collaborative approach which involves several stakeholders, including:

- Parties involved in the funding of the programme

- Organisational staff

- Mentoring programme participants

- Board members

- Participating organisations (e.g. contracted external evaluators)

- Policymakers

The evaluation process should have a strong focus on utility, i.e. the outcomes of the evaluation should be practical and useful for end-users and stakeholders. Therefore, the evaluation should consider:

- Implementation and impact as an ongoing process and what your actions should build on, rather than replace

- Existing information systems to promote improvements that you can sustain over time

- Previous lessons learned from research along with the current expectations of the stakeholders

- Expected results, and how reality may differ from theory

- The value of the results which will be obtained through the evaluation process

The mentoring programme implemented within your organisation will influence the strategy for monitoring and evaluation. A small programme with only a few active users (e.g. mentor, mentee, mentoring manager) will need a different approach for evaluation than, for example, an organisation-wide mentoring programme with many participants, and many levels. Additionally, a mentoring programme may be formal or informal, irrespective of the number of people involved.

A formal mentoring programme is more likely to have policies and procedures in place, which will be valuable in planning for monitoring and evaluation. In this section, the methods and techniques for evaluating a mentoring programme will be discussed. This will allow you to make an informed decision when developing your own evaluation plan.

2. Principles and methods for evaluating the mentoring process

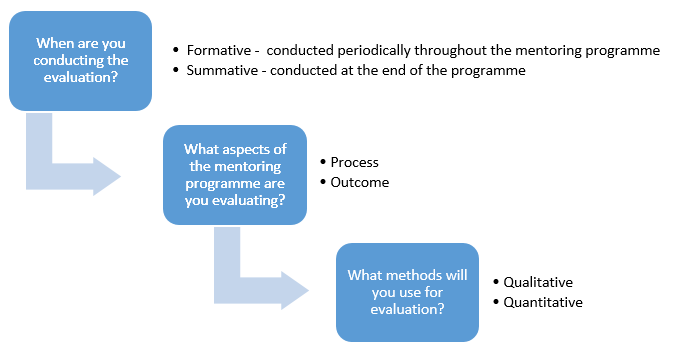

Evaluation can be done using formative or summative assessment.

| Formative assessment: | Formative assessment is a continual form of monitoring throughout the mentoring programme. |

| Summative assessment: | Summative assessment is conducted at the end of the mentoring programme. |

Formative and summative evaluation can be done using two key methods of evaluation: Process and Outcome.

Process evaluations focus on whether a programme is being implemented as intended, how it is being experienced, and whether changes are needed to address any problems (e.g., difficulties in recruiting and retaining mentors, high turnover of mentees, high cost of administering the program).

Outcome evaluations focus on what, if any, effects programs are having. Designs may, for example, compare goals to outcomes or examine differences between mentoring approaches. Information of this sort is essential for self-monitoring and can address key questions about programs and relationships.

Process and outcome evaluations can be done using either:

- Qualitative assessment, or;

- Quantitative assessment

| Quantitative assessment: | Quantitative methods are those that express their results in numbers. They tend to answer questions like "How many?" or "How much?" or "How often?" |

| Qualitative assessment: | Qualitative methods don't yield numerical results in themselves. They may involve asking people for "essay" answers about often-complex issues or observing interactions in complex situations. |

Qualitative and quantitative methods are, in fact, complementary. Each has strengths and weaknesses that the other doesn't, and together, they can present a clearer picture of the situation than either would alone. Often, the most accurate information is obtained when several varieties of each method are used. That's not always possible, but when it is, it can yield the best results.

Evaluation assessment methods

When determining the evaluation strategy, the mentoring relationship must be considered. The purpose of the mentoring programme is essentially to promote the growth and satisfaction of the participants.

Mentoring relationships are successful and satisfying for all parties involved when certain factors are established, and both the mentor and the mentee take active roles.

The following factors are suggested as key indicators for mentoring programme participants to assess the effectiveness of their mentoring outcomes.

1. Purpose:

- Both partners are clear on the reasons they're meeting

- The roles and objectives have been discussed and agreed by both parties

- Both partners will recognise when they've completed their purpose.

2. Communication:

- The mentor and mentee communicate in the ways they both prefer

- They get back to each other in the timeframe they've agreed upon.

- Both parties listen attentively, and remember information

- Information exchange and conversation is two-way

- Nonverbal language is monitored (e.g. body language supports verbal conversation)

3. Trust:

- Information is welcomed and kept confidential.

- Commitments are honoured, and cancellations aren't made without compelling reasons

- Neither partner talks negatively of others, or is unfairly critical

- As the relationship develops, and trust develops, information sharing increases

4. Process:

- Meetings are regular and at a time which suits both parties

- Sessions are usually an appropriate length

- Both parties should enjoy the meetings.

- The mentor and mentee should be aware of the four stages of formal mentoring (building rapport, direction setting, sustaining progress and ending the formal mentoring part of the relationship) and should be working through them.

- Both parties should be satisfied with the pairing, and should regularly evaluate the pairing to ensure compatibility

5. Progress:

- The mentee of the partnership has identified appropriate life goals and is making significant progress towards building competencies to reach those goals.

- Both parties identify interesting learning experiences and process the results of these together.

6. Feedback:

- Both parties discuss and agree on the feedback format

- Feedback should be given in an honest and tactful manner and as frequently as agreed upon

- Feedback should be welcomed and neither party should be defensive, but instead take immediate steps to apply it.

Using these 6 key parameters, evaluation questions can be determined, and a measurement framework can then be created to identify the data sources; frequency of data collection; and the qualitative and quantitative measures of change. This information, along with data collection methods, analysis strategies and plans for reporting and communicating the findings, are compiled to form the evaluation plan.

After the evaluation plan is completed, data collection can start, followed by data analysis.

Some analyses can be linear and straightforward. For example, if the goal of the evaluation is to find out the impact of your organisation's mentoring program, then the sequence of activity is more or less linear, as noted below.

- Collect data from the stakeholders before the programme starts.

- Collect data halfway through the programme or at the end of the programme (or both).

- Analyse the data using statistics (e.g., percentages) and coded qualitative data.
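The analysis step in the sequence above can be sketched in a few lines of Python. This is a minimal illustration only: the survey question, the responses and the theme codes are invented for the example, and `percent_agree` and `theme_counts` are hypothetical helper names rather than part of any evaluation toolkit.

```python
from collections import Counter


def percent_agree(responses):
    """Percentage of 'yes' answers in a list of yes/no survey responses."""
    if not responses:
        return 0.0
    return 100.0 * sum(1 for r in responses if r == "yes") / len(responses)


def theme_counts(coded_comments):
    """Tally how often each qualitative code (theme) was assigned."""
    return Counter(code for codes in coded_comments for code in codes)


# Hypothetical question: "Do you feel confident setting career goals?"
before = ["no", "no", "yes", "no", "yes"]    # collected before the programme starts
after = ["yes", "yes", "yes", "no", "yes"]   # collected at the end of the programme

# Hypothetical codes assigned to open-ended comments during analysis
coded = [["trust"], ["trust", "time pressure"], ["goal clarity"], ["trust"]]

change = percent_agree(after) - percent_agree(before)
print(f"Before: {percent_agree(before):.0f}%  After: {percent_agree(after):.0f}%")
print(f"Change: {change:+.0f} percentage points")
print(theme_counts(coded).most_common())
```

The percentages summarise the quantitative responses, while the tally of codes gives a rough view of which themes recur in the qualitative answers. Neither replaces reading the comments themselves.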


The next few stages after data collection and analysis relate to communication and interpretation of findings and making informed decisions about improvements and next steps. The reporting, interpretation and reflection of evaluation findings will be discussed in-depth in the "Conducting an Evaluation of the Mentoring Process" section.

2.1. Focus of Analysis

The focus of analysis is the subject of the evaluation, which may be an organisation, staff, a programme model, a policy or strategy, etc. The evaluation focus is not limited to one subject and can incorporate several of these subjects at the same time.

Depending on the scope and timeframe of the effort and expectations of all the key stakeholders, you can decide the focus of the evaluation.

When the focus of analysis is the individual:

- You are documenting the changes that individuals experience.

- Your evaluation will usually want to assess two things:

  - the degree to which the strategy, initiative or programme is being implemented as intended, and

  - whether the individual participants experienced the desired outcomes.

When the focus or unit of analysis is the organisation:

- You are evaluating changes in your organisation's priorities, policies and practices.

- The evaluation process should be used to collect and analyse data on key outcome indicators, such as how successful the organisation has been in:

  - Engaging and sustaining the involvement of different groups of people

  - Providing the resources for a mentoring programme

  - Developing the key documentation and guidance (e.g. policy and strategy) for the programme

  - Providing and/or supporting growth and opportunities for mentees

When the focus of analysis is the mentoring programme:

- To evaluate new or adapted initiatives or programmes, you could conduct a study to understand:

  - How the effort was implemented.

  - What elements made it effective.

  - What worked and didn't work and why.

  - What knowledge, skills and other capacities are required of the staff to implement the effort.

  - Most importantly, how to refine the effort.

- Much of the data collected could be qualitative. When the initiative's or program's elements have become final and stable, you can conduct an outcome or summative evaluation and combine the use of a quasi-experimental design using the methodology above to assess its effectiveness.

A quasi-experimental design assesses the causal effects of a programme by comparing two groups of participants (a "treatment" group and a "comparison" group) or by comparing data collected from one group of participants before and after they participated in the programme.
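The comparison described above can be illustrated with a small sketch. It assumes hypothetical self-ratings on a 1 to 5 scale collected before and after the programme from mentees (the "treatment" group) and from staff who did not take part (the "comparison" group). The data and the `mean_change` helper are invented for illustration. Because the groups are not randomly assigned, the result is only a rough estimate, not proof of cause and effect.

```python
from statistics import mean


def mean_change(pre, post):
    """Average per-participant change between two measurement points."""
    if len(pre) != len(post):
        raise ValueError("pre and post must cover the same participants")
    return mean(after - before for before, after in zip(pre, post))


# Hypothetical competence self-ratings (1-5), one value per participant
treatment_pre, treatment_post = [2, 3, 2, 3], [4, 4, 3, 5]      # mentees
comparison_pre, comparison_post = [2, 3, 3, 2], [3, 3, 3, 3]    # non-participants

treatment_gain = mean_change(treatment_pre, treatment_post)
comparison_gain = mean_change(comparison_pre, comparison_post)

# Gain beyond what the comparison group experienced over the same period
estimated_effect = treatment_gain - comparison_gain

print(f"Treatment gain:   {treatment_gain:.1f}")
print(f"Comparison gain:  {comparison_gain:.1f}")
print(f"Estimated effect: {estimated_effect:.1f}")
```

With only one group, the same helper gives the simple before-and-after comparison; the comparison group helps separate the programme's contribution from changes that would have happened anyway.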

2.2. Internal Versus External Evaluation

Internal evaluation is conducted by a staff person within the organisation that is conducting the programme or entity being evaluated, whereas an external evaluation is conducted by an evaluator who is not an employee of that organisation.

Whether an organisation should conduct an internal or external evaluation usually depends on the available resources, qualifications of the internal or external evaluator, scope of the evaluation and the funder's requirements. However, other factors are equally important in making this decision:

- External evaluators can bring a broader perspective while internal evaluators tend to have intimate knowledge about the context that the strategy, initiative or programme is operating within.

- External evaluators can be perceived as threatening while internal evaluators can be perceived as being less objective.

Sometimes, you have no choice because the funder requires an external evaluation. Regardless of whether an internal or an external evaluator is selected, clear lines of accountability must be established from the outset.

3. Stakeholders

As multiple stakeholders are involved in evaluation, you must carefully consider how the information and findings you share with the stakeholders, including your evaluator, can be used (or misused). Therefore, you should ensure that the data you collect for the evaluation cannot be taken out of context or misconstrued. If you have a designated evaluator, you should work with him or her on this issue.

The first step in identifying and engaging stakeholders is to consider who is interested in learning about the evaluation of the programme. Identify who is interested in the answers to questions such as:

- Is the mentoring programme being implemented as planned? Who stands to benefit or lose from the way the programme is run?

- Are the participants benefiting from programme implementation, as desired? Who stands to benefit or lose if they do or do not?

- Is the mentoring programme making an impact? Who stands to benefit or lose if it does or does not make an impact?

- Might the results affect policies? Who stands to benefit or lose if they do or do not?

Consideration of Different Types of Stakeholders

The following table may help identify stakeholder needs for the mentoring programme in your organisation.

| Stakeholders | Why are they interested in learning about how your programme is doing? |

| Funding bodies | They want to know if their investments were put to good use. If the mentoring programme is not reaching expectations, they would want to know why and make decisions about continuing to fund the programme or building the program's capacity (or not) based on the findings. |

| Programme Staff | They want to know if they are doing their work properly and if they are bringing about positive changes to the mentees, as set out in the mentoring plan. If not, they would want to use the information to improve their programme. |

| Board members | They want to learn about the progress of the programme and the impact it is making (or not) to their employees (mentees). If it is having an impact, they would want to use this information for their fundraising efforts. If not, they would want to address the issues the programme is facing. |

| Programme participants | The mentees want to tell you if and how the programme is helping them meet their career goals. They want to know if the findings match what they experienced. If not, they would want to help the programme capture more accurately the outcomes they experienced. |

Use of Evaluation by Different Types of Stakeholders

Different stakeholders are likely to use evaluation for different purposes. This produces certain benefits, but also potential risks that can be avoided if you have a strategy to deal with them first.

The following table provides general examples to help you think through the benefits and risks for each group and how you can avoid the risks, to the best of your ability, as every situation is different.

Benefits and Potential Risks of The Evaluation

| Stakeholder Group | The evaluation results can help this group by . . . | The evaluation results can put this group at risk by . . . | To reduce the risks, you should . . . |

| Funding bodies | Demonstrating that the grants have been useful and used effectively. | Highlighting that the funding body may be funding grants that do not work, and funding may be withdrawn. | Engage the programme officers early on to understand what they consider success and discuss their information needs. |

| Programme Staff | Showing that their efforts in the programme have been making a difference. | Showing that their work has not been making a difference. This could lead to budget and possibly even staff cuts. | Work with the staff early on to ensure the evaluation questions align with resource requirements. |

| Board members | Identifying areas where the organisation is making a difference. | Identifying areas of weaknesses in the non-profit organisation's leadership, administrative and financial systems and day-to-day operations. | Engage board members in the design and implementation of the evaluation. |

| Mentees | Strengthening, continuing or expanding programmes that they can continue to participate in and benefit from. | Eliminating opportunities afforded by the programmes. | Engage them early on so they can envision what a successful mentoring programme looks like, and to show them what benefits there are for them if they participate. |

Please take the time to complete a risk table, using table 2 as an example. You should work with each group of stakeholders to plan and take steps to prevent misunderstandings about the evaluation and misuse of the findings.

3.1. How to Identify Key Stakeholders

When determining who would qualify as a stakeholder, you should determine what your needs are to assess who you should involve. Since stakeholders offer different kinds of value, your reasons for inviting certain individuals or groups to participate should include the following:

- They have knowledge of the programme being evaluated (e.g. staff and any expert consultants you hired).

- They represent a variety of perspectives and experiences

- They are affected by the programme (e.g., programme participants).

- They are in positions of influence (e.g., board members, funding bodies).

- They are supporters of the evaluation process, and are involved in the evaluation's design and implementation

- They are responsible for decisions about the evaluation and programme (e.g., programme director, management).

4. Developing Evaluation Questions, Measurement Framework and Evaluation Plan

Evaluation is a key tool that will be used throughout the lifetime of a mentoring programme; it is therefore essential that the tools used for evaluation allow the process to be effective.

Using a logic model results in effective design of the effort and offers greater learning opportunities, better documentation of outcomes and shared knowledge about what works and why. More important, the logic model helps to ensure that evaluative thinking is integrated into your evaluation design and implementation.

A logic model is a graphic representation of the theory of change, illustrating the relationship between resources, activities, outputs and short-, intermediate- and long-term outcomes.
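Although a logic model is normally drawn as a diagram, its components can also be written down as a simple data structure, which makes gaps easy to spot. The sketch below is one possible representation, not a standard format; the `LogicModel` class, its field names and the example entries are assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class LogicModel:
    """Components of a logic model, in the order the definition lists them."""
    resources: list[str] = field(default_factory=list)
    activities: list[str] = field(default_factory=list)
    outputs: list[str] = field(default_factory=list)
    short_term_outcomes: list[str] = field(default_factory=list)
    intermediate_outcomes: list[str] = field(default_factory=list)
    long_term_outcomes: list[str] = field(default_factory=list)

    def gaps(self):
        """Names of the components that have not been filled in yet."""
        return [name for name, entries in vars(self).items() if not entries]


# Hypothetical, partly completed model for a small mentoring programme
model = LogicModel(
    resources=["trained mentors", "protected meeting time"],
    activities=["monthly mentoring sessions"],
    outputs=["number of sessions held"],
    short_term_outcomes=["mentees have set career goals"],
)

print(model.gaps())
```

Listing the empty components is a quick way to see where the theory of change still needs to be discussed with stakeholders.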

A logic model helps break down the programme implementation in a systematic way by highlighting the connections between the needs, programme resources, activities, outputs, outcomes and the long-term impact of a mentoring programme. By doing this, evaluation questions will be addressed, such as:

- How should the programme work?

- What are the differences between the planned process for implementation, versus reality?

- Where are there gaps or unrealistic expectations in the implementation strategy?

- Which areas of the logic model seem to be yielding the strongest outcomes or relationships to one another?

- Which areas of the model are not functioning in practice?

- Are there key factors that have not been embedded in the programme strategy that should be?

By organising evaluation questions based on the logic model, you are better able to determine which questions to target in an evaluation.

There is another consideration in developing evaluation questions. The questions also depend on the type of evaluation you want to conduct: a performance evaluation, a process or formative evaluation, or an outcome or summative evaluation.

A performance evaluation is concerned with:

- Ensuring accountability

- Demonstrating that resources are used as intended and are managed well

- Monitoring and reporting on progress toward established goals

- Providing early warning of problems to management

A process or formative evaluation is concerned with:

- Understanding if a programme is being implemented as planned, and according to schedule

- Assessing if the programme is producing the intended outputs

- Identifying strengths and weaknesses of the programme

- Informing mid-course adjustments

An outcome or summative evaluation is concerned with:

- Investigating whether the programme achieved the desired outcomes and what made it effective or ineffective

- Making mid-course adjustments to the effort

- Assessing if the effort is sustainable and replicable


4.1. Two Different Ways to Formulate Evaluation Questions About Your Programme

Here is a method to help formulate evaluation questions. Look at the logic model and start with the following five elements:

- Who - Who was your strategy, initiative or programme intended to benefit?

- What - What was the effort intended to do? What was the context within which the effort took place and how could it have affected its implementation and outcomes?

- When - When did activities take place? When did the desired changes start to occur?

- Why - Why is the effort important to your organisation or community? Why might it be important to people in other organisations or communities?

- How - How is the effort intended to affect the desired changes or bring about the desired outcomes?

Here are examples of evaluation questions to ask for the previous sample scenario:

Another method for formulating evaluation questions is to consider different aspects of your strategy, initiative or programme and generate questions about each of these aspects.

By using the logic model, and knowing the questions you want answered, you can develop a measurement framework, as an evaluation planning tool. Developing a measurement framework will allow you to determine how to assess progress toward achieving outcomes and answer the evaluation questions.

With a measurement framework for your effort in hand, you get a clear picture of how to conduct your evaluation. The measurement framework provides another opportunity for stakeholders to further define outcomes. With it, you can consider what the outcome means in more concrete terms.

Key Components of the Measurement Framework

Seven key components make up the measurement framework:

- Outputs are direct products of activities and may include types, levels and targets of services to be delivered by the strategy, initiative or program.

- Outcomes are the immediate, intermediate and long-term changes or benefits you need to document. These outcomes should be the same ones identified in the logic model.

- Indicators are markers of progress toward the change you hope to make with your strategy, initiative or program.

- Measures of change are values - quantitative and qualitative - that can be used to assess whether the progress was made.

- Data collection methods are the strategies for collecting data. This could include quantitative methods, such as conducting surveys or analysing existing data, or qualitative methods, such as conducting interviews or a document analysis.

- Data sources are the locations from which (e.g., national database, programme survey), or people from whom, (e.g., programme participants), you will obtain data.

- Data collection frequency is how often you plan to collect data.

How to Use a Measurement Framework

Once you have identified your outputs and immediate, intermediate and long-term outcomes, you can list each output and outcome on the measurement framework in the first column. After you have listed each one, you can make a clear plan for assessing progress toward that particular output or outcome. This involves moving across the rows of the measurement framework from left to right to identify indicators, measures of change, data collection methods, data sources and data collection frequency for each outcome. Please note that as you complete the measurement framework, some components could contain overlapping responses. For example, the data source for two outcomes may be the same.
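The row-by-row structure described above can be sketched as a small data structure. This is an illustrative layout only: `FrameworkRow`, `rows_by_source` and all the example entries are hypothetical, and a spreadsheet with the same columns would serve equally well.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class FrameworkRow:
    """One row of the measurement framework, read from left to right."""
    item: str               # the output or outcome being tracked
    kind: str               # "output" or "outcome"
    indicator: str          # marker of progress
    measure_of_change: str  # quantitative or qualitative value
    method: str             # data collection method
    source: str             # where, or from whom, the data comes
    frequency: str          # how often data is collected


def rows_by_source(rows):
    """Group tracked items by data source to reveal overlapping sources."""
    grouped = defaultdict(list)
    for row in rows:
        grouped[row.source].append(row.item)
    return dict(grouped)


# Hypothetical framework for a small mentoring programme
framework = [
    FrameworkRow("Mentoring sessions delivered", "output",
                 "Sessions held per pair", "Count of sessions per quarter",
                 "Review of session logs", "Programme records", "Quarterly"),
    FrameworkRow("Mentees build competencies", "outcome",
                 "Self-rated competence", "Change in rating (1-5 scale)",
                 "Survey", "Programme participants", "Start and end of programme"),
    FrameworkRow("Mentees satisfied with pairing", "outcome",
                 "Reported satisfaction", "Themes in interview responses",
                 "Interview", "Programme participants", "Every six months"),
]

for source, items in rows_by_source(framework).items():
    print(f"{source}: {items}")
```

Grouping by source shows the overlap mentioned above: here two outcomes draw on the same participants, so their data collection could be planned together.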

When developing the evaluation questions, the following four categories for measuring mentoring programmes should be considered:

- Relationship processes

- Programme processes

- Relationship outcomes

- Programme outcomes

The following table illustrates the areas of evaluation for these categories.

| Category | Evaluation |

| Relationship processes | What happens in the relationship? For example: how often does the pair meet? Have they developed sufficient trust? Is there a clear sense of direction to the relationship? Does the mentor or the mentee have concerns about their own or the other person's contribution to the relationship? |

| Programme processes | For example, how many people attended training? How effective was the training? In some cases, programme processes will also include data derived from adding together measurements from individual relationships, to gain a broad picture of the situation |

| Relationship outcomes | Have mentor and mentee met the goals they set? (Some adjustment may be needed if circumstances evolve and the goals need to change slightly.) |

| Programme outcomes | Has the programme, for example, increased retention of key staff, or raised the competence of the mentees? |

5. Evaluation Plan

Using the logic model, the evaluation questions, and the measurement framework tool as the basic components, you can now start to develop an evaluation plan that pulls all of these and more together.

A good evaluation plan should have the following elements:

- Background Information about the programme

- Evaluation Questions: Specific questions that are measurable.

- Evaluation Design: Data collection methods; types of data that will be collected; sampling procedures; analysis approach; steps taken to ensure accuracy, validity and reliability; and limitations.

- Timeline: Completion dates and time ranges for key steps and deliverables.

- Plan for communicating findings and using results

- Evaluator/evaluation team: Specify who is responsible for conducting the evaluation process and what this role entails. This might be an external evaluator, internal evaluator or internal evaluator with an external consultant.

- Budgetary Information: Could include expenses for staff time, consultants' time, travel, communications, supplies and other costs (e.g., incentives for participants, translation and interpretation time).

Parts of your evaluation plan can be copied and used to write your evaluation report. The table below shows the general outlines for the evaluation plan, and the evaluation report.

| Evaluation Plan | Evaluation Report |

| Cover Page: Includes clear title; name and location of the strategy, initiative or program; period to be covered by evaluation. | Cover Page: Includes clear title; name and location of the strategy, initiative or program; period covered by evaluation or date evaluation was completed. |

| Background Information of the Effort: Purpose of the evaluation, origins and goals of effort, activities and services, including theory of change and logic model. | Executive Summary: Brief, stand-alone description of program, outline of evaluation purpose and goals, methods, summary of findings and recommendations. |

| Evaluation Questions: Specific questions that are measurable, might need to be prioritised to focus resources and keep evaluation manageable. | Introduction and Background: Purpose of the evaluation, origins and goals of effort, target population, activities and services, review of related research, evaluation questions and overview and description of report |

| Evaluation Design: Data collection methods; types of data to be collected; sampling procedures; analysis approach; steps taken to ensure accuracy, validity and reliability; and limitations. | Evaluation Design: Data collection methods, types of data collected, sampling procedures; analysis approach; steps taken to ensure accuracy, validity, and reliability; and limitations (include the theory of change and logic model). |

| Timeline: Completion dates or time ranges for key steps and deliverables. | Evaluation Results: Evaluation findings, evaluation questions addressed, visual representations of results (e.g., charts, graphs, etc.). |

| Plan for Communicating Findings and Using Results to Inform Work: Details regarding what products will be developed and what will be included in each product. Potential users and intended audience include programme staff and administration, program participants, community leaders, funders, public officials and partner organisations. | Summary, Conclusion and Recommendations: Summary of results, implications of findings, factors that could have shaped results, clear and actionable recommendations. |

| Budgetary Information: Might include expenses for staff time, consultants' time, travel, communications, supplies and other administrative costs. (If the plan is to be shared with a wider group of people, information about staff and consultants' time may be excluded.) | |

| Evaluator and Evaluation Team: Specifications regarding who is responsible for conducting the evaluation process and what this role entails. This may be an external evaluator, internal evaluator or internal evaluator with an external consultant. | |

A variety of factors, some of them unexpected, can affect how long it takes to implement and complete an evaluation. When developing the outline of the evaluation, you should consider the following potential barriers:

- Reluctant participation in the evaluation process by stakeholders

- Evaluation answers not guaranteed to be honest

- Participants might find it difficult to give negative responses

- Anonymity is harder to achieve with a small mentoring programme

- Participants may find it difficult to self-reflect

- Programme strategy may be unclear or inadequate

- Low response rate to surveys

- Long-term success of outputs is harder to determine when the evaluation is carried out early in the mentoring programme lifecycle

5.1. Evaluation Type

The type of evaluation you want depends on the stage that your strategy, initiative or programme is in and the purpose of the evaluation. Evaluation type is not the same thing as evaluation methodology. Once you determine the type you should use – and depending on what you want to accomplish through the evaluation process – you can decide on the approach to use.

Essentially, there are three major types of evaluation and each serves a specific function and answers certain questions:

- Performance monitoring

- Process or formative evaluation

- Outcome or summative evaluation

Sometimes it may be necessary to conduct all three types of evaluation simultaneously.

The table below summarises the key types of evaluation, their purpose, the kinds of questions they answer, and when they should be conducted.

| Evaluation Type | Purpose | Kinds of Questions Answered | Timing |

| Performance monitoring | | | Performance monitoring can be conducted throughout the strategy, initiative or program period, from beginning to end. |

| Process or Formative Evaluation | | | Process or formative evaluation should be conducted at the start-up period and while the programme elements are still being adapted. |

| Outcome or Summative Evaluation | | | Outcome or summative evaluation should be conducted when immediate and intermediate outcomes are expected to emerge, usually after the effort has been going on for a while, or when it is considered "mature" or "stable" (i.e., no longer being adapted and adjusted). |
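The timing guidance above can be read as a simple lookup from programme stage to suitable evaluation types. The following Python sketch is illustrative only: the stage names (`START_UP`, `ADAPTING`, `MATURE`) and the function `applicable_evaluation_types` are hypothetical labels introduced here, and the mapping is a simplification, since in practice all three types may sometimes need to run at once.

```python
from enum import Enum
from typing import List


class Stage(Enum):
    """Hypothetical programme stages, named for this sketch only."""
    START_UP = "start-up"   # programme is being set up
    ADAPTING = "adapting"   # programme elements are still being adjusted
    MATURE = "mature"       # stable, no longer being adapted


def applicable_evaluation_types(stage: Stage) -> List[str]:
    """Return the evaluation types that typically fit a programme stage."""
    # Performance monitoring can run from beginning to end.
    types = ["performance monitoring"]
    # Formative evaluation fits start-up and the adaptation period.
    if stage in (Stage.START_UP, Stage.ADAPTING):
        types.append("process or formative evaluation")
    # Summative evaluation fits a mature or stable programme.
    if stage is Stage.MATURE:
        types.append("outcome or summative evaluation")
    return types


for stage in Stage:
    print(stage.value, "->", ", ".join(applicable_evaluation_types(stage)))
```

A lookup like this is only a starting point; the purpose of the evaluation should still guide the final choice.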

5.2. Evaluation Plan

Purpose and Scope

In general, the purpose of your evaluation should be to establish the outcomes or value of the programme you are providing and to find ways to improve the programme. Both a formative and a summative evaluation should be designed.

Defining the purpose of your evaluation will help you focus and delineate the other steps in the evaluation process. The scope of your evaluation must also be determined. It may be narrow or broad; it may focus on all participants or on targeted groups. A narrow, focused evaluation might seek answers to questions such as:

- How successful has the mentor/mentee pairing been?

- What has been the impact of mentor training?

- Have the mentor and mentee achieved the objectives laid out in their mentoring plans? (measuring mentor/mentee performance)

- Has the programme had a positive impact on, for example, employee competence?

The scope of your evaluation can also be very broad; for example: measuring the success and impact of the mentoring programme.

The scope of an evaluation is often determined by the amount of resources available to you. The larger and more involved the evaluation, the costlier it will be in terms of time and money. If minimal resources are available, consider a more focused and less involved evaluation process.

Method

In your evaluation plan, you should decide what information you want to obtain, what methods you will use to gather it, and what evidence you will use to support it. For example:

| Evaluation Criteria | Method of Evaluation | Supporting Evidence |

| Have the mentees found the mentoring programme to be a useful tool? | Questionnaire/Questions | |

| Has the mentoring programme improved mentee career growth within the company? | Data analysis | |

| Has the mentor met all their expectations? | | |

| Has the mentee met all their expectations? | | |
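One way to keep track of such a plan is to record each criterion with its method and evidence, then check which rows are still incomplete. The sketch below is a minimal illustration, not a prescribed tool: the class `PlanRow`, the function `incomplete_rows` and the evidence entry "Completed questionnaires" are all hypothetical names and values introduced for this example.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PlanRow:
    """One row of an evaluation plan (hypothetical structure)."""
    criterion: str
    method: str = ""
    evidence: List[str] = field(default_factory=list)


def incomplete_rows(plan: List[PlanRow]) -> List[str]:
    """Return the criteria that still lack a method or supporting evidence."""
    return [row.criterion for row in plan if not row.method or not row.evidence]


plan = [
    PlanRow(
        "Have the mentees found the mentoring programme to be a useful tool?",
        method="Questionnaire",
        evidence=["Completed questionnaires"],  # illustrative value only
    ),
    # No method or evidence has been chosen yet for this criterion.
    PlanRow("Has the mentor met all their expectations?"),
]

print(incomplete_rows(plan))
```

Running the check before the evaluation starts shows which criteria still need a method or evidence assigned.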

Data

Your evaluation questions determine your data sources. Once your questions are specified, your next step is to determine who or what can best provide the information needed to answer them. Some potential data sources include:

- Mentor

- Mentee

- Mentor line manager

- Colleagues

- Upper management

Data sources might also include records about your programme, such as the number of career development plans completed and signed, or the time spent on a career development plan. Data sources can also include the review of records that have been kept by others, such as:

- Attendance at training programmes

- Attendance at conferences and events relating to their job role